[Dive Deeper]

The team struggled with the project many times this semester, but every time a dilemma arose, we found a way out of it. The habit of “diving deeper” was central to that.

Throughout the semester, a series of progress reports pushed us to solve the problems we had identified, step by step. Each report was tied to a new big data concept: preliminary data analysis, temporal/spatial analysis, data visualization, and prediction modeling. All of these concepts were new to us, and the requirements were demanding. Our team therefore committed to diving deeper into each report to exceed expectations despite our limited time and technical ability.

Here is an example of how we dove deeper into the data sources. Initially, we tried several sources: Tumblr, Twitter, and Reddit. We spent a lot of effort collecting the data, but when we reached the “temporal/spatial analysis” report, the data on hand was not good enough for a complete analysis. The Tumblr data set, for example, had no user profile or timestamp information, and the Twitter streaming API returned very limited information. At that point, the data was barely sufficient for the report and certainly could not exceed expectations.

Next, our team split into two groups to look for more insightful ways to analyze the data. Although Tumblr did not work out well because of API limitations, the Twitter data was greatly expanded. In addition to the streaming API, we used the user timeline API and the user profile API to collect a much more complete data set. As a result, our final prediction model, the ultimate goal of the team project, relied heavily on the Twitter data set.

The complexity of big data comes in many forms. For us, “diving deeper” into a big data set stands for perseverance and dedication. Our team members, pure amateurs in the big data field, were eventually able to use big data as a tool to run a prediction model. The result may well be off from the real world, but the point is that we learned an approach to solving the problem, to making big data “insightful”, and to diving deeper not only into the data set but also into our own thinking. Beyond all the terminology and technology, diving deeper helped us throughout the semester to make big data vivid. We expect this habit to serve all of our team members well in their future careers.

[Setting the Goal]

The most crucial lesson from our team project was to have a clear vision before diving deep into the analysis. At the start of the project, we began collecting as much data as possible immediately after settling on the topic, sleep paralysis. Since we anticipated that data collection and cleaning would take a long time, we wanted to act as soon as possible. Even though everyone on the team understood the importance of defining a goal at the start, we failed to do so. As a result, we struggled with the goal of our analysis and prediction almost every step of the way. Fortunately, we were able to set the purpose of the data analysis before performing the technical analysis work. Even so, we felt lost at certain times during the project. If we had clearly thought out and defined the purpose of the sleep paralysis analysis, the project would have gone much more smoothly.

The true value of any data analysis, including big data analysis, lies in its goal. In the age of information explosion, the challenge is no longer too little information but too much. How to survive the waves of information without losing our purpose is a key question not only in data analysis but also in life. An appropriate and meaningful goal is the first step toward success.

Setting a good goal for data analysis requires considering multiple aspects. Below are the three most important aspects that our team learned from the project:

• Limitations

During our data collection stage, we used APIs from Twitter, Tumblr, and Reddit. One thing we were not prepared for was that they offered only very limited data. That was very frustrating for our team, since an essential part of big data analysis is temporal and spatial information at the individual user level. These limitations should be taken into consideration when setting the goal.

• Data Set Attributes

Understanding the data set we would be working with was crucial before setting the goal. We learned late in the project that one third of the location information in the Twitter data set was invalid. Such exploratory findings are vital in deciding the direction of the analysis. For example, if we had had high-quality location data for the sleep paralysis patients, we would have focused our data analysis on locations.

• Business Value

Business value is the core component of big data analysis, and it is the part we struggled with most when trying to set a goal. For sleep paralysis, it was hard for us to think of appropriate business scenarios because we lacked domain knowledge. Domain knowledge would have helped us dive deeper into the analysis and guided us each step of the way.
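The exploratory check described under Data Set Attributes (measuring how much of the location information is usable) can be sketched in a few lines. This is only an illustration: the profile records, the `KNOWN_PLACES` gazetteer, and the validity rule are all hypothetical and are not the ones our team actually used.

```python
# Hypothetical gazetteer of recognizable places (assumption for illustration).
KNOWN_PLACES = {"tucson, az", "london", "new york"}


def is_valid_location(raw):
    """Treat a location as valid only if it matches a known place name."""
    if raw is None:
        return False
    return raw.strip().lower() in KNOWN_PLACES


def invalid_share(records):
    """Return the fraction of records whose 'location' field is invalid."""
    if not records:
        return 0.0
    invalid = sum(1 for r in records if not is_valid_location(r.get("location")))
    return invalid / len(records)


# Hypothetical user profiles, standing in for collected Twitter profiles.
profiles = [
    {"user": "u1", "location": "Tucson, AZ"},
    {"user": "u2", "location": "  london "},
    {"user": "u3", "location": "somewhere over the rainbow"},
    {"user": "u4", "location": None},
]

share = invalid_share(profiles)
print(f"Invalid locations: {share:.0%}")
```

Running a check like this at the very beginning, rather than late in the project, would show early on whether a location-based goal is realistic.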

[Data Collection & Data Cleaning]

This is the first course that required us to collect streaming data rather than just download data sets from websites. It is also the first course that covered the whole process, from data collection to model construction, which meant that we had to begin the project from scratch. Before this course, we usually searched everywhere for suitable data sets to analyze. If we had several data sets, we compared them and picked the one that was easiest to build models on. In other words, we tended to pick data sets that had already been cleaned by others, so all we had to worry about was how to build models and make predictions. However, that is not all there is to data analysis.

This course helped us realize that collecting and cleaning data usually take most of the time. Before building models, we have to decide how we are going to collect our data. Are we writing programs to collect data from websites, or designing questionnaires and sending out surveys? If we are going to collect data from websites, what techniques should we use? These questions should be answered before the project even begins. After that, we need to clean and transform the data. This process takes most of the time, which is different from what we used to think. We need to decide which format fits the project best and how we are going to store the data. We also need to determine which parts of the data are valuable for further analysis and which parts are unimportant and should be discarded. We believe this process is the most important part of the entire project, since we spent hours and hours debating it. In this course, therefore, we learned how to choose a project goal, determine proper methods for collecting data, and audit data for quality.

[Gephi and Network Analysis]

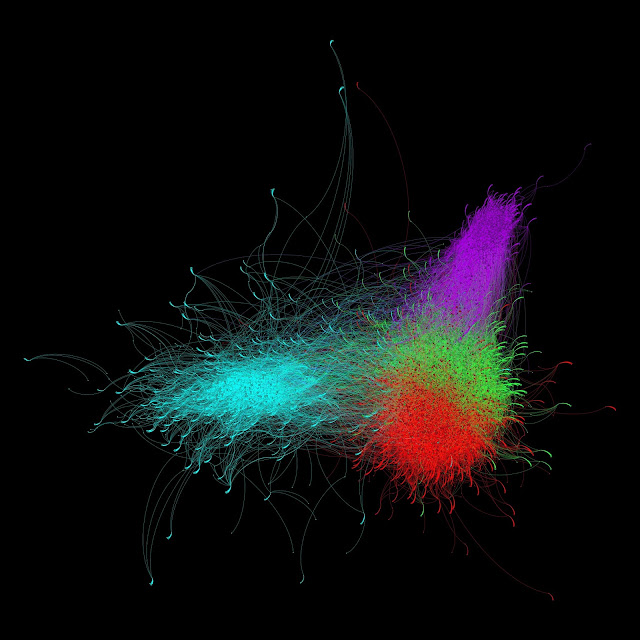

Beyond the learning curve of data collection and data cleaning, we enjoyed using Gephi to visualize our network. It is a powerful tool for analyzing and presenting networks. We are proud that our “artwork” is displayed to the public as part of the Eller MIS department.

During the visualization assignment, we had some technical issues with Gephi. The network we tried to load into Gephi was too large. The software would run, but it usually crashed partway through. It took us many hours, even days, to load the data, calculate centrality, and visualize the network. The lesson we learned from this process is to use an XML file to modify the network outside Gephi. The XML file records all the data for each node and edge, from locations to colors and centrality values. Here are some sleep paralysis community visualizations that we created after the visualization assignment:

The entire network

Four communities in the network

Another four communities in the network
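The idea of modifying the network through its XML file rather than inside Gephi can be sketched as follows. This is a minimal example under stated assumptions: it assumes the network is stored in GEXF (an XML graph format that Gephi can read and write) with the 1.2draft namespaces, and the sample graph, node ids, and the `recolor_nodes` helper are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespaces of GEXF 1.2draft (assumption; other GEXF versions use other URIs).
GEXF_NS = "http://www.gexf.net/1.2draft"
VIZ_NS = "http://www.gexf.net/1.2draft/viz"
ET.register_namespace("", GEXF_NS)
ET.register_namespace("viz", VIZ_NS)

# Tiny hypothetical graph standing in for a real exported file.
SAMPLE = """<gexf xmlns="http://www.gexf.net/1.2draft"
      xmlns:viz="http://www.gexf.net/1.2draft/viz" version="1.2">
  <graph defaultedgetype="directed">
    <nodes>
      <node id="a" label="user_a"><viz:color r="0" g="0" b="0"/></node>
      <node id="b" label="user_b"><viz:color r="0" g="0" b="0"/></node>
    </nodes>
    <edges>
      <edge id="0" source="a" target="b"/>
    </edges>
  </graph>
</gexf>"""


def recolor_nodes(root, node_ids, rgb):
    """Set the viz:color of every node whose id is in node_ids; return the count."""
    changed = 0
    for node in root.iter(f"{{{GEXF_NS}}}node"):
        if node.get("id") not in node_ids:
            continue
        color = node.find(f"{{{VIZ_NS}}}color")
        if color is None:
            # Node had no color element yet, so create one.
            color = ET.SubElement(node, f"{{{VIZ_NS}}}color")
        for channel, value in zip("rgb", rgb):
            color.set(channel, str(value))
        changed += 1
    return changed


root = ET.fromstring(SAMPLE)
changed = recolor_nodes(root, {"a"}, (255, 0, 0))
updated_xml = ET.tostring(root, encoding="unicode")
print(f"Recolored {changed} node(s)")
```

For a real file, `ET.parse(path)` and `tree.write(path)` would replace the inline string. Editing the file this way avoids reloading and restyling a very large network interactively.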

On the other side of the struggle, Gephi gave us many options for analyzing the network and asking questions. When we looked at our network, we asked:

+What community does this node belong to? What do the members of that community have in common?

+Why does it belong to that community? Why does this node have the highest/lowest betweenness/closeness centrality?

+Who is this node? Who does this node connect to?

+How does this node affect other nodes and the network?

Those questions helped us understand and dive deeper into the network. We realized that the more of our own questions we answered, the more questions we wanted to ask. Many things were revealed during these question-and-answer exercises in our team. For example, according to our network, many people who said they had experienced sleep paralysis are connected to heavy metal music accounts on Twitter. Because of the time limits of this semester, we could not verify whether there is a real relationship between sleep paralysis and heavy metal music. However, investigating what causes sleep paralysis would be a good research topic. With that knowledge, we might be able to build a prediction model to identify who potentially has sleep paralysis. In addition, this idea could be extended to a network of people who may have high stress levels, suffer from depression, or think about suicide. Those conditions may be among the many factors behind the rise in mass shootings around the world over the last 5 years. If we could build a prediction model on this subject, it might help depressed people and prevent potential mass shootings.
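The node-level questions above (who a node connects to, how central it is, and what a group of users has in common) can also be explored outside Gephi. The sketch below is only an illustration using the Python standard library: the edge list and account names are hypothetical, the graph is treated as undirected, and closeness is computed with a plain breadth-first search rather than Gephi's own implementation.

```python
from collections import Counter, deque

# Hypothetical undirected edges between accounts (not our real data).
EDGES = [
    ("user1", "metal_band"),
    ("user2", "metal_band"),
    ("user3", "metal_band"),
    ("user1", "user2"),
    ("user3", "news_site"),
]


def build_adjacency(edges):
    """Map each node to the set of nodes it is connected to."""
    adj = {}
    for a, b in edges:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return adj


def closeness(adj, start):
    """Closeness centrality: reachable nodes / sum of shortest-path lengths."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in adj[node]:
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    total = sum(dist.values())
    return (len(dist) - 1) / total if total else 0.0


def shared_connections(adj, group):
    """Count how many members of the group connect to each outside account."""
    members = set(group)
    counts = Counter()
    for member in group:
        counts.update(adj[member] - members)
    return counts


adj = build_adjacency(EDGES)
group = ["user1", "user2", "user3"]
top_shared = shared_connections(adj, group).most_common(1)
metal_closeness = closeness(adj, "metal_band")
print(top_shared, metal_closeness)
```

A shared connection that shows up for most members of a group, like the hypothetical `metal_band` account here, is a pattern worth investigating. It does not by itself show a real relationship.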

[Network Thinking]

Over the course of four months, Dr. Ram and Devi completely changed our understanding of big data and introduced a whole new perspective on big data analytics. Our team wants to make a specific note about network thinking. Before we took this class, each of us had done some data analysis on our own. In that previous experience, identifying a topic was the most difficult part, because we always started by examining the available data sets. When certain information was unavailable, we tended to give up. This selection process ruled out a lot of interesting topics.

In this class, Dr. Ram introduced network thinking, which can be applied to any topic, especially social media analysis. Network thinking allows us to incorporate data sets and information that are related to, but do not come directly from, the study target. For example, our group studied sleep paralysis in social media networks. Not only did we collect data about sleep paralysis patients, but we also identified their common interests by examining their interactions on social media. It would have been impossible to achieve this with traditional analysis.

With network thinking techniques, we are tapping into the future of data analysis. According to Dr. Albert-László Barabási, people in this industry are becoming aware of the network effect due to technological advances. However, we are still at the stage of using network thinking as little more than a buzzword. In the article “Thinking in Network Terms”, Dr. Barabási explains the several stages we have to go through to be able to use this power. The first stage is thinking in network terms, which is exactly what Dr. Ram taught us in this class.

We were impressed by the Smart Campus and the Asthma Emergency Room Visit examples, which are perfect demonstrations of how to apply network thinking to solve real-world problems. In the Smart Campus example, Dr. Ram looked into the interactive behaviors of students by tracking their campus card usage. That data might not yield much meaning when analyzed with traditional data analysis techniques. With network thinking, we can dig deeper into the data set to incorporate temporal and spatial variables. We can even compare students' interactive behaviors with their grades. Dr. Ram’s team was able to build a model that predicts the college dropout rate and how likely a student is to drop out in the future. As these examples show, network thinking is extremely powerful. The concept is not limited to data analysis; we can use network thinking to make daily life decisions, too. Dr. Ram also explains her idea of creating a smarter world with big data and network thinking in a TEDx talk.

REFERENCE: https://edge.org/conversation/albert_l_szl_barab_si-thinking-in-network-terms